
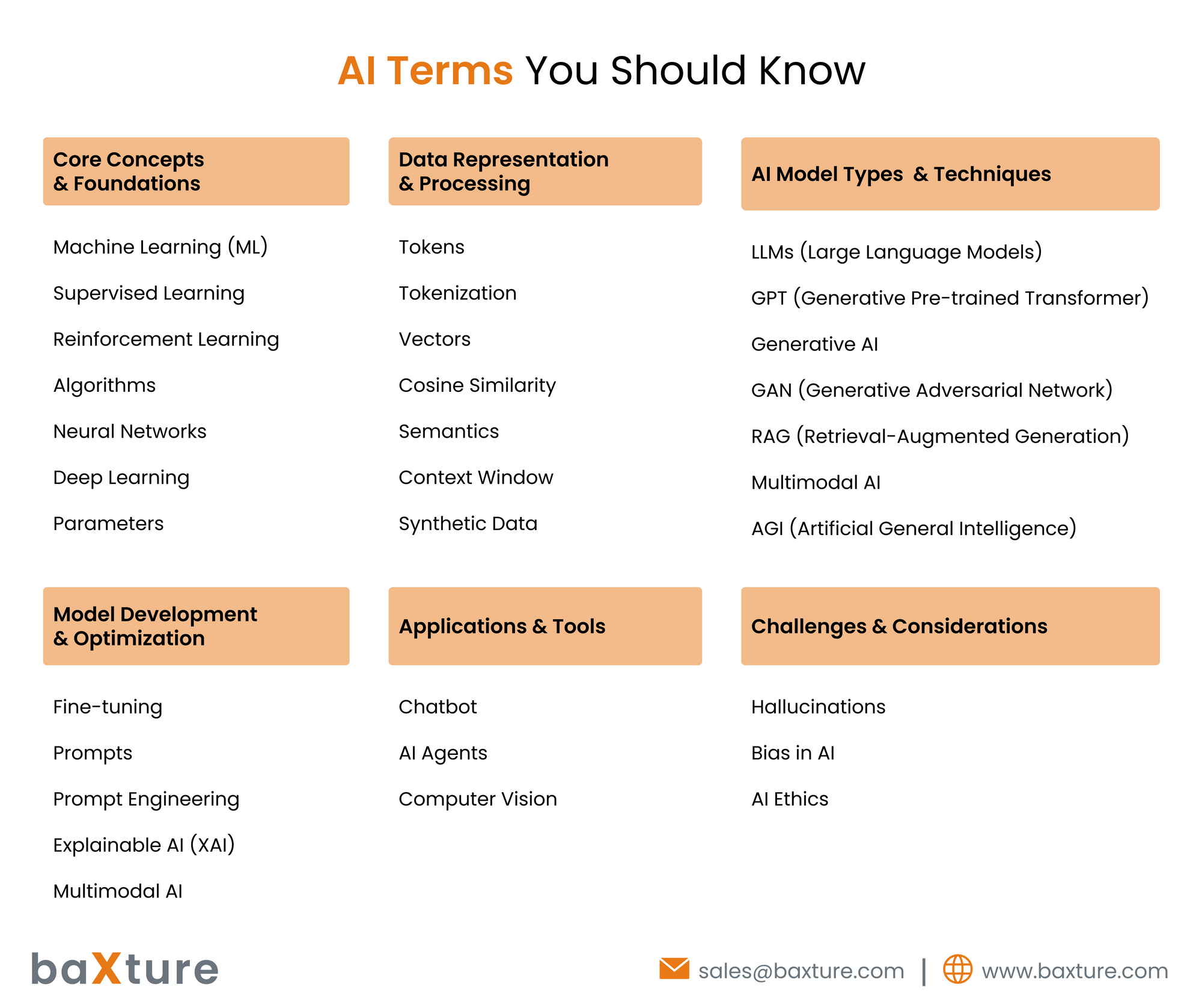

AI Terms You Should Know

Artificial Intelligence (AI) is rapidly transforming industries, redefining productivity, and reshaping how we interact with technology. Yet, for many, the terminology can seem like a confusing sea of buzzwords. Whether you're a curious beginner, a tech enthusiast, or a professional looking to keep pace, understanding the core AI vocabulary is essential. This guide unpacks the most important AI terms you need to know, with clear explanations that demystify the jargon.

1. RAG (Retrieval-Augmented Generation)

RAG is a technique that combines information retrieval with generative models. Instead of relying solely on what the model memorized during training, a RAG system first retrieves relevant documents from an external knowledge source (such as a document store or vector database) and passes them to the model along with the user's question. Grounding answers in real, retrievable data this way can substantially reduce hallucinations, although it does not eliminate them.
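The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration, not a production design: it assumes a tiny hypothetical in-memory document list and uses simple word-count vectors in place of learned embeddings. The final step, sending the prompt to a language model, is left out because it depends on whichever model API you use.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "knowledge base"; a real system would typically use a vector database.
DOCUMENTS = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Python is a programming language created by Guido van Rossum.",
    "The Great Wall of China is thousands of kilometers long.",
]

def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count each word (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str) -> str:
    """Augment the user's question with retrieved context before it is sent to an LLM."""
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("When was the Eiffel Tower completed?"))
```

Because the model is told to answer from the retrieved text, its answer can cite the 1889 date from the document rather than relying on memory.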

2. Tokens

Tokens are the basic units of text that a language model processes. A token can be a whole word, part of a word, or even a punctuation mark, depending on the model's tokenizer. For example, "ChatGPT" might be broken into two tokens: "Chat" and "GPT". Token count affects processing speed, cost, and how much text a model can handle at once.

3. Tokenization

Tokenization is the process of breaking text into tokens and mapping each token to an integer ID from the model's fixed vocabulary; these IDs are what the model actually receives. Most modern language models use subword tokenizers (for example, byte-pair encoding), which let a limited vocabulary represent rare or unseen words as combinations of familiar pieces.
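Here is a minimal sketch of subword tokenization, assuming a tiny hand-written vocabulary (`VOCAB` is hypothetical). It uses greedy longest-match, similar in spirit to how WordPiece tokenizes at inference time; production tokenizers such as byte-pair encoding learn vocabularies of tens of thousands of entries from data and handle spaces and unknown characters differently.

```python
# Hypothetical toy vocabulary mapping subword strings to integer IDs.
VOCAB = {"chat": 0, "gpt": 1, "token": 2, "ization": 3, "s": 4, " ": 5, "<unk>": 6}

def tokenize(text: str) -> list[str]:
    """Split text into the longest matching vocabulary pieces, scanning left to right."""
    text = text.lower()
    tokens, i = [], 0
    while i < len(text):
        # Try the longest possible piece first, then shrink until one matches.
        for j in range(len(text), i, -1):
            if text[i:j] in VOCAB:
                tokens.append(text[i:j])
                i = j
                break
        else:
            tokens.append("<unk>")  # no vocabulary entry covers this character
            i += 1
    return tokens

def encode(text: str) -> list[int]:
    """Map each token to its integer ID, which is what the model actually receives."""
    return [VOCAB[t] for t in tokenize(text)]

print(tokenize("ChatGPT tokenization"))  # ['chat', 'gpt', ' ', 'token', 'ization']
print(encode("ChatGPT tokenization"))    # [0, 1, 5, 2, 3]
```

Note how "tokenization" is never in the vocabulary as a whole word, yet it is still represented exactly by two familiar pieces.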

4. Semantics

Semantics deals with meaning in language. In AI, semantic analysis is used to understand the meaning behind words, sentences, and context. It allows AI to distinguish between "bank" as a financial institution and the "bank" of a river by using contextual cues.
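Modern language models resolve word senses implicitly through context-dependent vectors. A much simpler classic technique, the simplified Lesk algorithm, makes the idea of "contextual cues" concrete: pick the sense whose description shares the most words with the sentence. The sense descriptions below are hypothetical; real systems would draw them from a resource such as WordNet.

```python
import re

# Hypothetical sense "glosses" (short descriptions) for the word "bank".
SENSES = {
    "financial institution": "money deposit loan account cash withdraw",
    "river bank": "river water shore fishing slope stream",
}

def disambiguate(sentence: str) -> str:
    """Simplified Lesk: choose the sense whose gloss overlaps most with the sentence's words."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    return max(SENSES, key=lambda sense: len(context & set(SENSES[sense].split())))

print(disambiguate("I need to deposit cash at the bank"))        # financial institution
print(disambiguate("We went fishing on the bank of the river"))  # river bank
```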

5. Cosine Similarity

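Cosine similarity measures how closely two vectors point in the same direction, ignoring their magnitude. In natural language processing it is often used to compare texts (via their vector representations) or to rank search results. Values range from -1 to 1: a score of 1 means the vectors point in exactly the same direction (the texts are highly similar), 0 means they are orthogonal (typically unrelated), and -1 means they point in opposite directions.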
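The formula is cos(θ) = (A · B) / (‖A‖ × ‖B‖): the dot product of the two vectors divided by the product of their lengths. A direct Python translation, with small example vectors chosen for illustration:

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Dot product divided by the product of the vector lengths (magnitudes)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(cosine_similarity([1, 2, 3], [2, 4, 6]))  # about 1.0: same direction, different magnitude
print(cosine_similarity([1, 0], [0, 1]))        # 0.0: orthogonal
print(cosine_similarity([1, 0], [-1, 0]))       # -1.0: opposite directions
```

The first pair shows why magnitude is ignored: `[2, 4, 6]` is twice as long as `[1, 2, 3]`, yet the score is (up to floating-point rounding) 1.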

6. Vectors

In AI, vectors are lists of numbers that represent words, sentences, or even images. Similar concepts end up close together in this vector space, which lets models capture relationships between them. A classic example from word embeddings is that the arithmetic “king” - “man” + “woman” produces a vector close to the one for “queen”.
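The “king” - “man” + “woman” example can be reproduced with toy vectors. The 3-dimensional embeddings below are made up so that the dimensions loosely mean "royalty", "male" and "female"; real models learn hundreds of dimensions that are rarely this interpretable. The result is matched to the nearest remaining word by cosine similarity.

```python
import math

# Made-up 3-D "embeddings": dimensions loosely represent royalty, male, female.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def nearest(target, exclude):
    """Return the word whose vector points most nearly in the same direction as target."""
    candidates = [w for w in EMBEDDINGS if w not in exclude]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))

# king - man + woman, computed element by element
target = [k - m + w for k, m, w in zip(EMBEDDINGS["king"], EMBEDDINGS["man"], EMBEDDINGS["woman"])]
print(nearest(target, exclude={"king", "man", "woman"}))  # queen
```

Excluding the three input words follows the usual convention for analogy queries, since the input words themselves are often the closest matches.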

7. Hallucinations

AI hallucinations occur when a model generates text that sounds plausible but is factually incorrect or entirely made up. It’s one of the biggest challenges in generative AI, especially in tasks requiring factual accuracy like legal or medical advice.

8. Context Window

The context window is the maximum amount of text, measured in tokens, that an AI model can "see" at once. If a model has a 4,000-token context window, the input and the generated output together must fit within those 4,000 tokens; anything beyond that must be dropped or summarized. Larger windows let a model take in longer conversations or documents in a single pass.
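Chat applications commonly deal with the limit by keeping only the most recent messages that fit, while reserving room for the model's reply. The sketch below shows that strategy under a loud assumption: `count_tokens` is a hypothetical stand-in that counts whitespace-separated words, whereas a real system would use the model's own tokenizer.

```python
def count_tokens(text: str) -> int:
    """Hypothetical approximation: one token per whitespace-separated word."""
    return len(text.split())

def fit_to_window(messages: list[str], window: int, reserve_for_output: int) -> list[str]:
    """Keep the most recent messages whose total fits in the window minus the output reserve."""
    budget = window - reserve_for_output
    kept, used = [], 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(msg)
        if used + cost > budget:
            break                   # older messages no longer fit
        kept.append(msg)
        used += cost
    return list(reversed(kept))     # restore chronological order

history = ["hello there", "tell me about tokens", "what is a context window", "thanks"]
print(fit_to_window(history, window=10, reserve_for_output=3))
# ['what is a context window', 'thanks']
```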

9. Parameters

Parameters are the learned weights (and biases) in a neural network. They determine how input data is transformed at each layer. Modern large language models have billions to hundreds of billions of parameters (GPT-3, for example, has 175 billion), which helps them perform complex tasks and capture nuanced language.
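Counting parameters is simple arithmetic. A fully connected layer with `n_in` inputs and `n_out` outputs has one weight per input-output pair plus one bias per output. The layer sizes below (784 inputs, 128 hidden units, 10 outputs) are just an illustrative small network, not any particular model.

```python
def dense_layer_params(n_in: int, n_out: int) -> int:
    """A fully connected layer: n_in * n_out weights plus n_out biases."""
    return n_in * n_out + n_out

def mlp_params(layer_sizes: list[int]) -> int:
    """Sum the parameters of consecutive fully connected layers."""
    return sum(dense_layer_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))

print(mlp_params([784, 128, 10]))  # 784*128 + 128 + 128*10 + 10 = 101770
```

Even this toy network has about 100,000 parameters, which gives a sense of scale when LLMs are described in billions.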

10. Machine Learning (ML)

ML is a subset of AI focused on building algorithms that learn from data to make predictions or decisions. Instead of relying on hardcoded rules, ML models learn patterns from examples and generally improve as they are trained on more, and better, data.

11. Generative AI

Generative AI refers to systems that can create new content—text, images, music, or code—based on patterns learned from training data. Examples include ChatGPT for text, DALL·E for images, and Codex for programming.

12. Neural Networks

Neural networks are computing systems loosely inspired by the structure of the human brain. They consist of interconnected nodes (neurons) arranged in layers; each neuron computes a weighted sum of its inputs and passes the result through an activation function. Deep neural networks can detect complex patterns in data, powering speech recognition, image analysis, and more.
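A forward pass through a tiny network shows the layer-by-layer computation. The weights here are hand-picked (not learned) so that this 2-input, 2-hidden-neuron, 1-output network computes XOR, a function no single neuron can represent on its own; in practice, weights are found by training.

```python
def relu(x: float) -> float:
    """Activation function: pass positive values through, clip negatives to 0."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation=None):
    """One layer: each neuron takes a weighted sum of its inputs plus a bias."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

# Hand-picked weights for a 2-2-1 network that computes XOR.
HIDDEN_W, HIDDEN_B = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
OUTPUT_W, OUTPUT_B = [[1.0, -2.0]], [0.0]

def forward(x1: float, x2: float) -> float:
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)  # hidden layer with ReLU
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]         # linear output layer

for a in (0, 1):
    for b in (0, 1):
        print(a, b, forward(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```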

13. Deep Learning

Deep learning is a subfield of ML that uses neural networks with many layers (hence "deep"). It excels at handling unstructured data like images, audio, and text, and is behind the latest breakthroughs in language models and computer vision.

14. AI Agents

AI agents are autonomous entities capable of perceiving their environment, making decisions, and taking actions to achieve specific goals. In advanced settings, they can operate independently, solve problems, and even collaborate with other agents or humans.
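The perceive-decide-act cycle can be sketched with a toy thermostat agent. The environment, thresholds, and one-degree-per-step dynamics are all made up for illustration. Modern LLM-based agents replace the hand-written rule with a model that chooses among tools, but the loop has the same shape.

```python
def thermostat_agent(temperature: float, target: float = 21.0) -> str:
    """Decide: a simple reflex rule mapping what the agent perceives to an action."""
    if temperature < target - 1:
        return "heat"
    if temperature > target + 1:
        return "cool"
    return "idle"

def run(start_temp: float, steps: int) -> list[str]:
    """Loop: perceive the environment, decide, act, and let the action change the environment."""
    temp, log = start_temp, []
    for _ in range(steps):
        action = thermostat_agent(temp)                           # perceive + decide
        temp += {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]  # act on the simulated room
        log.append(action)
    return log

print(run(17.0, 6))  # ['heat', 'heat', 'heat', 'idle', 'idle', 'idle']
```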

15. Algorithms

An algorithm is a step-by-step set of rules for solving a problem. In AI, algorithms are used to train models, make predictions, process data, and more. The effectiveness of an AI system depends heavily on the choice and tuning of algorithms.

16. GAN (Generative Adversarial Network)

A GAN is composed of two neural networks, a generator and a discriminator, that compete against each other. The generator creates fake data (e.g., images), and the discriminator tries to tell that fake data apart from real examples. As training proceeds, the generator gets better at producing convincing data, enabling realistic content creation.
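The competition is usually written as the minimax objective from the original GAN formulation. Here D(x) is the discriminator's estimated probability that x is real, and G(z) is the generator's output for a random noise vector z. The discriminator tries to maximize V (score real data near 1 and fakes near 0), while the generator tries to minimize it by making D(G(z)) close to 1.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```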

17. Explainable AI (XAI)

Explainable AI refers to methods that make AI’s decision-making transparent and understandable to humans. It is crucial for building trust, especially in critical sectors like healthcare, finance, and law, where users need to understand why the AI made a certain decision.
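For a linear model, an explanation can be exact: each feature contributes its weight times its value to the final score. The sketch uses a hypothetical credit-scoring model with made-up weights and applicant data. Methods such as SHAP and LIME extend this style of per-feature attribution to complex models where the contributions are not directly readable.

```python
# Hypothetical linear credit-scoring model: score = bias + sum(weight * feature).
WEIGHTS = {"income": 0.4, "debt": -0.7, "years_employed": 0.2}
BIAS = 1.0

def explain(applicant: dict[str, float]) -> dict[str, float]:
    """For a linear model, each feature's contribution is exactly weight * value."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}

applicant = {"income": 5.0, "debt": 2.0, "years_employed": 3.0}
contributions = explain(applicant)
score = BIAS + sum(contributions.values())

# Report features from most to least influential.
for name, c in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {c:+.2f}")
print(f"{'score':>15}: {score:+.2f}")
```

The output shows a user not just the score but why: income pushed it up the most, and debt pulled it down.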

18. Supervised Learning

In supervised learning, models are trained on labeled datasets, meaning each input comes with a corresponding output. The model learns to map inputs to outputs, making it ideal for tasks like classification and regression.
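A classic perceptron trained on a tiny labeled dataset shows the input-to-output mapping being learned rather than hardcoded. The dataset here is the logical AND function, chosen because it is small and linearly separable; the learning rate and epoch count are illustrative choices.

```python
# Labeled dataset: each input pair comes with its correct output (logical AND).
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, x):
    """Output 1 if the weighted sum crosses the threshold, else 0."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0

def train_perceptron(data, epochs=10, lr=1.0):
    """Perceptron rule: nudge the weights toward the label whenever a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in data:
            error = label - predict(weights, bias, x)  # 0 when correct, +1 or -1 when wrong
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias

w, b = train_perceptron(DATA)
print([predict(w, b, x) for x, _ in DATA])  # [0, 0, 0, 1]
```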

19. Synthetic Data

Synthetic data is artificially generated data that mimics the statistical properties of real data. It's often used when real data is unavailable, sensitive, or expensive to collect. Synthetic data helps train and test AI models and can reduce privacy risks, since it need not contain records of real individuals.
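A deliberately simple sketch of the idea: fit a normal distribution to a hypothetical column of real ages, then sample new values from it. Real synthetic-data tools model many columns and the correlations between them, and a fitted distribution on its own does not guarantee privacy.

```python
import random
import statistics

real_ages = [23, 35, 31, 45, 52, 29, 38, 41]  # hypothetical "real" records

def fit_and_sample(data, n, seed=42):
    """Fit a normal distribution to the data, then draw n new synthetic values from it."""
    mu, sigma = statistics.mean(data), statistics.stdev(data)
    rng = random.Random(seed)  # seeded so the example is reproducible
    return [rng.gauss(mu, sigma) for _ in range(n)]

synthetic = fit_and_sample(real_ages, 1000)
# The synthetic values should have roughly the same mean and spread as the originals.
print(round(statistics.mean(synthetic), 1), round(statistics.stdev(synthetic), 1))
```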

20. LLMs (Large Language Models)

LLMs are AI models trained on massive corpora of text to understand and generate human-like language. They use billions of parameters to capture complex linguistic patterns and are the backbone of modern tools like ChatGPT, Claude, and Gemini.

21. Chatbot

A chatbot is a conversational AI system that simulates human-like dialogue. Basic chatbots follow scripted flows, while advanced ones (like ChatGPT) generate responses in real time using natural language processing.

22. Fine-tuning

Fine-tuning involves taking a pre-trained model and training it further on a specific dataset or domain. This customizes the model to perform better on specialized tasks, like legal document generation or medical diagnosis.
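The principle of continuing training from existing weights can be shown with a toy two-parameter linear model, y = w*x + b, trained by plain gradient descent. Both datasets are made up, and real LLM fine-tuning updates billions of parameters, but the mechanics are analogous: start from pre-trained weights, then train further on a smaller specialized dataset.

```python
def train(w, b, data, lr, epochs):
    """Gradient descent on mean squared error for the model y = w*x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# "Pre-training" from scratch on a broad dataset where y = 2x.
general = [(x, 2 * x) for x in range(-5, 6)]
w, b = train(0.0, 0.0, general, lr=0.01, epochs=500)

# "Fine-tuning": start from the pre-trained weights and adapt to a small specialized dataset (y = 2x + 1).
specialized = [(0, 1), (1, 3), (2, 5)]
w_ft, b_ft = train(w, b, specialized, lr=0.1, epochs=300)
print(round(w, 3), round(b, 3), "->", round(w_ft, 3), round(b_ft, 3))  # 2.0 0.0 -> 2.0 1.0
```

The fine-tuned model keeps the slope it already learned and only has to adjust the offset for the new domain.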

23. Prompts

Prompts are the inputs or questions given to a language model. A well-crafted prompt helps the AI generate more accurate, relevant, or creative responses. Prompt design plays a huge role in outcome quality.

24. Prompt Engineering

Prompt engineering is the art and science of crafting effective prompts to achieve desired results from an AI model. It’s essential for maximizing the utility of generative AI, especially when coding, writing, or answering complex queries.
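One widely used prompt-engineering pattern is few-shot prompting: state the task clearly, show a few worked examples, then give the new input in the same format. The helper below is a hypothetical sketch that only assembles the text; the "Instruction/Input/Output" labels are an arbitrary choice, not a format required by any particular model.

```python
def build_few_shot_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a prompt with a clear instruction, worked examples, and the new input."""
    lines = [f"Instruction: {task}", ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]  # leave the final answer for the model to fill in
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("I loved this movie!", "positive"), ("Total waste of time.", "negative")],
    "The acting was superb.",
)
print(prompt)
```

Ending the prompt with an open "Output:" line nudges the model to answer in the same concise format as the examples.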

25. GPT (Generative Pre-trained Transformer)

GPT is a family of LLMs developed by OpenAI. “Generative” refers to its ability to produce text, “Pre-trained” means it first learns from large datasets before any task-specific tuning, and “Transformer” is the neural network architecture, built around a mechanism called attention, that lets it model context and relationships between words.
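The Transformer's central operation is scaled dot-product attention, softmax(QKᵀ / √d) V: each position compares its query with every key, turns the scores into weights, and takes a weighted average of the values. The pure-Python sketch below shows a single attention head on hand-picked vectors. Real GPT models also learn the projections that produce Q, K and V, apply a causal mask so each token attends only to earlier tokens, and run many heads and layers.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, one query at a time."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # attention weights: non-negative, sum to 1
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs

# Three token positions with hand-picked 2-D vectors (no learned projections, no masking).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = Q
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out = attention(Q, K, V)
print(out)
```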

26. Reinforcement Learning (RL)

In RL, an agent learns to make decisions by interacting with its environment and receiving rewards or penalties. It’s used in applications like robotics, game AI, and self-driving cars, where trial-and-error learning improves performance over time.
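Tabular Q-learning, one standard RL algorithm, shows trial-and-error learning in miniature. The environment is a made-up five-cell corridor: the agent starts at the left end and earns a reward of +1 for reaching the right end. The hyperparameters (`alpha`, `gamma`, `epsilon`, episode count) are illustrative choices, and the random seed is fixed so the run is reproducible.

```python
import random

# Hypothetical toy environment: a 1-D corridor; start in cell 0, reward +1 for reaching cell 4.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right

def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply an action; return (next_state, reward, episode_done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] = estimated long-term value
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore at random with probability epsilon (or when values are tied);
            # otherwise exploit the action with the highest estimated value.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward the reward plus the discounted value of the next state.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(GOAL)]
print(policy)  # ['right', 'right', 'right', 'right']
```

Nobody tells the agent that "right" is correct; the reward at the goal propagates backward through the value estimates until moving right looks best in every cell.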

27. Bias in AI

Bias in AI occurs when models produce unfair or prejudiced outcomes due to biased training data or flawed assumptions. Addressing bias is critical to ensure equitable AI systems that don’t reinforce discrimination or inequality.
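One simple way to check a model for bias is to compare how often it gives a positive outcome to each group, a fairness metric known as demographic parity. The loan-approval predictions below are hypothetical. Demographic parity is only one of several, sometimes conflicting, fairness definitions, so a small gap does not by itself prove a model is fair.

```python
def positive_rate(predictions, groups, group):
    """Fraction of members of `group` who received a positive (1) prediction."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)

def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rates between any two groups (0 means parity)."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)

# Hypothetical loan-approval predictions (1 = approved) for applicants in groups A and B.
preds  = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_difference(preds, groups))  # 0.75 - 0.25 = 0.5
```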

28. Computer Vision

Computer vision is a field of AI that enables machines to interpret visual information from the world—images, videos, or live feeds. It powers facial recognition, object detection, autonomous vehicles, and more.
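Many computer vision models, especially convolutional neural networks, are built on convolution: sliding a small grid of weights (a kernel) across an image. The sketch below applies a hand-picked kernel that responds to vertical edges to a made-up 4×4 grayscale image; in a trained network, the kernel values would be learned. Like most deep-learning libraries, it does not flip the kernel, so strictly speaking it computes cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

# 4x4 grayscale image: dark left half (0), bright right half (9).
IMAGE = [[0, 0, 9, 9] for _ in range(4)]
# Kernel that responds to left-to-right increases in brightness (a vertical edge).
EDGE_KERNEL = [[-1, 1], [-1, 1]]
print(convolve2d(IMAGE, EDGE_KERNEL))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The large values in the middle column mark exactly where the dark and bright regions meet, which is the kind of low-level feature deeper layers combine into shapes and objects.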

29. AI Ethics

AI ethics encompasses the moral principles governing the design and use of AI. It includes issues such as transparency, accountability, fairness, privacy, and societal impact. Ethical AI development ensures that these powerful tools benefit everyone responsibly.

30. Multimodal AI

Multimodal AI systems can process and integrate multiple types of inputs—such as text, images, and audio—enabling richer understanding and interaction. An example is a model that can describe a photo in words or answer questions about a video clip.

31. AGI (Artificial General Intelligence)

AGI refers to a hypothetical form of AI that possesses human-like intelligence, capable of understanding, learning, and applying knowledge across a wide range of tasks. Unlike narrow AI, AGI would not need task-specific programming and could perform any cognitive task that a human can.