
What Are AI Agents?

An AI Agent is a software program that can reason and act on its own to achieve a goal.

AI Agents are intelligent systems designed to perform tasks, make decisions, and take actions autonomously based on their understanding of goals and data.

Unlike traditional software that follows fixed instructions, AI Agents can analyze information, plan actions, and adapt to new situations without constant human input. They can communicate with other systems, learn from experience, and operate across various domains, such as managing schedules, analyzing business data, automating customer service, or controlling self-driving cars.

In essence, an AI Agent acts as a digital decision-maker that combines perception, reasoning, and action to achieve specific objectives efficiently.

The evolution of AI systems has moved from simple rule-based programs to intelligent agents capable of reasoning, learning, and acting autonomously. In the early stages, AI systems relied on explicit instructions—they could only perform predefined tasks like solving mathematical problems or playing chess using fixed algorithms. As computing power and data availability grew, machine learning emerged, enabling systems to learn from data instead of being manually programmed. This led to the development of deep learning, where neural networks allowed machines to recognize images, understand speech, and generate human-like text. The next stage introduced AI assistants, such as chatbots and virtual helpers, that could understand natural language and assist users in real time. Today, AI has advanced into agentic systems—AI Agents that can plan, make decisions, collaborate with other agents, and execute actions across different applications without direct human control. This progression reflects a shift from static, reactive AI to proactive, goal-driven intelligence, capable of continuous learning and adaptation.

Core Concepts of AI Agents

Goal-Oriented Intelligence

AI Agents are built around the principle of goal-oriented intelligence, meaning their actions are guided by specific objectives or desired outcomes. Instead of merely responding to commands, these agents evaluate situations, plan steps, and make decisions that move them closer to achieving a defined goal. This ability makes them proactive—constantly analyzing whether their current actions are effective and adjusting strategies when needed.

Goal-driven behavior allows an AI Agent to operate intelligently in dynamic environments. It continuously observes inputs, reasons about the best course of action, and acts to minimize the gap between the current state and the goal state. For instance, a navigation agent finds the shortest route to a destination, while a business process agent might optimize workflows to reduce costs or time.

Goal specification can be explicit or implicit:

- Explicit goals are directly defined by users or systems—such as “deliver a package to location X” or “increase website traffic by 15%.”

- Implicit goals are learned or inferred from patterns, preferences, or context—like a recommendation system understanding user tastes over time or a personal assistant predicting a meeting schedule based on habits.

Together, these aspects form the foundation of how AI Agents understand purpose, plan intelligently, and act autonomously toward measurable results.
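The idea of acting to shrink the gap between the current state and the goal state can be sketched in a few lines. This is a minimal illustration rather than a production design: the grid world, the `distance` measure, and all function names are assumptions introduced here for the example.

```python
from typing import List, Tuple

State = Tuple[int, int]

# Hypothetical action set: unit moves on a 2-D grid.
ACTIONS = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def distance(state: State, goal: State) -> int:
    """Gap between the current state and the goal (Manhattan distance)."""
    return abs(state[0] - goal[0]) + abs(state[1] - goal[1])


def step_toward(state: State, goal: State) -> State:
    """Choose the action whose resulting state is closest to the goal."""
    candidates = [(state[0] + dx, state[1] + dy) for dx, dy in ACTIONS.values()]
    return min(candidates, key=lambda s: distance(s, goal))


def run(start: State, goal: State, max_steps: int = 100) -> List[State]:
    """Observe, act, and re-check the gap until the goal is reached."""
    path = [start]
    state = start
    while state != goal and len(path) <= max_steps:
        state = step_toward(state, goal)
        path.append(state)
    return path
```

Calling `run((0, 0), (2, 1))` returns the sequence of states visited, starting at `(0, 0)` and ending at the explicit goal `(2, 1)`. A greedy rule like this only works on an open grid; real agents usually need planning or learning to get around obstacles.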

Autonomy and Supervision Levels

AI Agents differ in how independently they operate, which is defined by their level of autonomy and supervision. This determines how much control the agent has over its decisions and actions versus how much guidance it receives from humans.

Fully autonomous agents can function independently once their objectives are set. They observe their environment, make decisions, and execute actions without requiring continuous human input. These agents are capable of adapting to new situations, learning from experience, and optimizing performance over time. Examples include self-driving cars that navigate traffic on their own or robotic process automation systems that manage end-to-end business workflows.

Semi-autonomous (human-supervised) agents, on the other hand, operate with partial human involvement. They perform specific tasks autonomously but depend on human feedback, validation, or intervention for critical decisions or exceptions. For example, an AI agent that drafts legal documents may still need a human to review and approve them before submission.

The role of human oversight remains crucial, even in highly autonomous systems. Oversight ensures ethical decision-making, prevents errors in complex or sensitive environments, and maintains accountability. Humans provide strategic direction, define boundaries, and step in when the agent encounters uncertainty or moral dilemmas. In essence, effective AI systems balance autonomy with supervision—allowing agents to act intelligently while keeping humans in control of ultimate outcomes.

Environment and Context Awareness

AI Agents operate effectively only when they can sense, perceive, and interpret their environment accurately. The environment represents everything external that influences the agent’s decisions—such as data inputs, user interactions, or physical surroundings. To act intelligently, agents use sensors, data streams, or APIs to gather information, then process it through algorithms that interpret meaning and context. This process allows agents to understand not just what is happening, but also why it is happening and how to respond appropriately.

For example, a virtual assistant interprets user messages (text or voice) to determine intent, while a self-driving car senses nearby objects and road conditions through cameras and sensors. By continuously perceiving changes, the agent maintains awareness and adapts its actions to meet its goals effectively.

AI environments can be static or dynamic:

- Static environments remain fixed while the agent deliberates. Conditions are known in advance and do not change unexpectedly, as in a chess puzzle, where the board stays the same until the agent makes its move.

- Dynamic environments are constantly changing, requiring agents to adapt in real time. Examples include financial trading systems reacting to market fluctuations or delivery drones adjusting to weather and obstacles.

In summary, context and environmental awareness enable AI Agents to make informed, situation-specific decisions, bridging perception with purposeful action in both predictable and unpredictable settings.

Multi-Agent Systems

Multi-Agent Systems (MAS) involve multiple AI Agents working together within a shared environment to achieve individual or collective goals. Each agent operates autonomously but can collaborate, communicate, and coordinate with others to handle complex tasks that exceed the capabilities of a single agent. These systems mirror real-world teamwork, where agents exchange information, negotiate, and divide responsibilities to improve efficiency and outcomes.

Collaboration and communication are central to MAS. Agents share data, status updates, and intentions through defined communication protocols, enabling them to align actions and avoid conflicts. For example, in a logistics network, one agent may manage inventory while another handles routing, both communicating to ensure on-time delivery. Similarly, in smart cities, multiple agents control traffic lights, energy grids, and public transport systems in sync to optimize urban flow.

Agent hierarchies and orchestration determine how multiple agents interact and are managed. In a hierarchical or orchestrated structure, a higher-level agent (the orchestrator) oversees or coordinates lower-level ones, ensuring that local actions contribute to the overall system objective, much like a manager guiding team members. In decentralized systems, agents collaborate as equals, making joint decisions through negotiation or consensus mechanisms.

By integrating collaboration, communication, and hierarchy, multi-agent systems enable scalable, distributed intelligence—allowing multiple AI entities to function collectively, adapt dynamically, and achieve results more efficiently than isolated systems.
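The logistics example above, where an inventory agent and a routing agent coordinate through messages, might look roughly like the sketch below. The `Message` fields, the `MessageBus`, and both agent classes are hypothetical stand-ins for whatever communication protocol a real system would use.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Message:
    sender: str
    recipient: str
    topic: str
    payload: dict


@dataclass
class MessageBus:
    """Shared channel; a stand-in for a real communication protocol."""
    queue: deque = field(default_factory=deque)

    def send(self, msg: Message) -> None:
        self.queue.append(msg)


class InventoryAgent:
    name = "inventory"

    def __init__(self, stock: Dict[str, int]):
        self.stock = stock

    def handle_order(self, item: str, qty: int, bus: MessageBus) -> None:
        # Reserve stock, then tell the routing agent what to ship.
        if self.stock.get(item, 0) >= qty:
            self.stock[item] -= qty
            bus.send(Message(self.name, "routing", "ship", {"item": item, "qty": qty}))


class RoutingAgent:
    name = "routing"

    def __init__(self):
        self.scheduled = []

    def receive(self, msg: Message) -> None:
        if msg.topic == "ship":
            self.scheduled.append(msg.payload)


def dispatch(bus: MessageBus, agents: dict) -> None:
    """Deliver every queued message to its named recipient."""
    while bus.queue:
        msg = bus.queue.popleft()
        agents[msg.recipient].receive(msg)
```

Each agent keeps its own state and only learns about the other through messages, which is what lets the two be developed, scaled, or replaced independently.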

Architecture of an AI Agent

Core Components of AI Agents

AI Agents are structured into key functional layers that enable them to perceive, think, act, and learn within their environments. Each layer serves a distinct role, working together to ensure intelligent, goal-driven behavior.

1. Perception Layer – Sensors, Data Ingestion, Environment Monitoring

The perception layer is the agent’s connection to the external world. It gathers information from sensors, APIs, or data streams to understand the current state of its environment. This can include visual data, user inputs, system logs, or contextual cues. By converting raw inputs into meaningful representations, this layer allows the agent to detect patterns, identify changes, and maintain situational awareness.

2. Reasoning Layer – Decision-Making Logic, Inference Engines

The reasoning layer processes information gathered by the perception layer and determines the best course of action. It applies logic, inference rules, or probabilistic models to evaluate options and predict outcomes. This is where the agent “thinks,” weighing alternatives to choose the most effective strategy. For instance, a delivery agent might reason about traffic and weather to plan an optimal route.

3. Action Layer – Execution Mechanisms, System Integrations

The action layer translates decisions into concrete actions. It executes commands, interacts with systems or devices, and performs tasks to achieve objectives. Whether it’s sending messages, updating databases, or controlling a physical robot, this layer ensures that the agent’s intentions are carried out accurately and efficiently.

4. Learning Layer – Feedback Loops, Reinforcement Learning, Adaptation

The learning layer allows the agent to improve performance over time. Through feedback loops, it evaluates past actions, measures outcomes, and refines its decision-making process. Using techniques such as supervised learning, unsupervised learning, or reinforcement learning, the agent adapts to new environments, corrects mistakes, and enhances efficiency through experience.

Together, these four layers form the foundation of intelligent behavior—where perception provides awareness, reasoning drives decisions, action delivers execution, and learning ensures continuous improvement.

Human Layer

The Human Layer plays a critical role in ensuring that AI Agents operate safely, ethically, and effectively within human-defined boundaries. This layer integrates Human-in-the-Loop (HITL) principles—where human expertise, judgment, and oversight are embedded into the agent’s decision-making process. Instead of allowing full automation, HITL systems involve humans at key stages such as data labeling, decision validation, and exception handling. This approach combines the speed and precision of AI with the contextual understanding and moral reasoning of humans.

Human-in-the-Loop (HITL) systems are particularly valuable in complex or high-stakes environments like healthcare, finance, and law enforcement, where autonomous actions without supervision could lead to errors or ethical concerns. Humans guide the agent’s learning, refine its reasoning models, and step in when the system encounters ambiguity or uncertain scenarios.

Control, feedback, and approval mechanisms within the human layer ensure accountability and transparency. Control mechanisms define when and how humans can override agent actions. Feedback mechanisms allow humans to correct or adjust the agent’s behavior based on real-world outcomes. Approval mechanisms provide checkpoints where human validation is required before the agent executes critical actions.

By maintaining this structured collaboration, the human layer ensures that AI Agents remain aligned with human goals, comply with ethical standards, and deliver decisions that are both intelligent and responsible.
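One way to picture an approval checkpoint is a small gate that lets low-risk actions through and routes everything else to a reviewer. The sketch below is illustrative only: the `risk` score, the threshold value, and the `approve` callback are assumptions, and how risk is actually estimated is outside its scope.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    risk: float  # hypothetical risk score in [0, 1]


def execute_with_oversight(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    risk_threshold: float = 0.5,
) -> str:
    """Run low-risk actions directly; route risky ones through a human checkpoint."""
    if action.risk < risk_threshold:
        return f"executed: {action.description}"
    # Approval mechanism: a human (or review queue) must say yes first.
    if approve(action):
        return f"executed after approval: {action.description}"
    # Control mechanism: the human decision overrides the agent.
    return f"blocked by reviewer: {action.description}"
```

In practice `approve` would present the action to a person and wait for a response; here it is any function that returns `True` or `False`, which also makes the gate easy to test.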

Data and Knowledge Base

The Data and Knowledge Base forms the foundation of an AI Agent’s intelligence, enabling it to understand, reason, and act effectively. This layer stores and manages all the information the agent relies on—from raw data inputs to organized knowledge structures that support decision-making.

AI Agents use both structured and unstructured data to build situational awareness and context. Structured data includes information organized in defined formats such as databases, spreadsheets, or knowledge graphs—making it easy to search, retrieve, and analyze. Unstructured data, such as text documents, images, videos, emails, and audio recordings, provides richer, real-world context. Advanced AI models process this unstructured data using natural language processing, computer vision, and machine learning to extract meaning and insights.

To make sense of all this information, agents rely on knowledge representation models—frameworks that define how information is stored, connected, and interpreted. Common models include ontologies, which map relationships between concepts; semantic networks, which represent knowledge as interconnected nodes; and knowledge graphs, which combine structured data and relationships to enable reasoning and inference. These representations allow agents to draw conclusions, detect patterns, and make informed decisions.

In essence, the data and knowledge base is what gives an AI Agent its “understanding” of the world—transforming raw data into usable intelligence and providing the cognitive foundation for learning, reasoning, and autonomous action.
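To make the idea of a knowledge graph concrete, the sketch below stores facts as subject, predicate, object triples and performs one simple inference by following `is_a` links. The class name, the predicate names, and the sample facts are all assumptions made for illustration; real systems typically use a graph database or an RDF store.

```python
from typing import List, Optional, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


class KnowledgeGraph:
    def __init__(self) -> None:
        self.triples: Set[Triple] = set()

    def add(self, s: str, p: str, o: str) -> None:
        self.triples.add((s, p, o))

    def query(
        self,
        s: Optional[str] = None,
        p: Optional[str] = None,
        o: Optional[str] = None,
    ) -> List[Triple]:
        """Return triples matching the pattern; None acts as a wildcard."""
        return [
            t for t in self.triples
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)
        ]

    def is_a(self, entity: str, category: str) -> bool:
        """Infer category membership by following 'is_a' edges transitively."""
        seen = set()
        frontier = [entity]
        while frontier:
            node = frontier.pop()
            if node == category:
                return True
            if node in seen:
                continue
            seen.add(node)
            frontier.extend(obj for _, _, obj in self.query(s=node, p="is_a"))
        return False
```

After adding `("sedan", "is_a", "car")` and `("car", "is_a", "vehicle")`, the call `is_a("sedan", "vehicle")` succeeds even though that fact was never stored directly, which is the kind of inference the paragraph above describes.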

Integration Interfaces

The Integration Interfaces layer enables AI Agents to connect, communicate, and collaborate with external systems, applications, and data sources. It acts as the bridge between the agent’s internal intelligence and the outside world—allowing it to perform actions, exchange information, and trigger workflows seamlessly across digital ecosystems.

APIs (Application Programming Interfaces) are the core enablers of this integration. They allow agents to send and receive data, execute commands, and interact with other software platforms in real time. For example, a customer service AI Agent may use APIs to access CRM data, update tickets, or send automated responses through chat systems.

Workflows define how agents coordinate multiple actions across systems in a logical sequence. They enable task automation—such as approving a request, generating a report, or updating a record—based on triggers and predefined rules. Through workflow orchestration, agents can manage complex, multi-step processes without direct human input.

External system connections extend the agent’s capabilities beyond its native environment. These may include integrations with databases, cloud platforms, IoT devices, or enterprise applications like ERP and HRMS systems. By establishing secure, real-time connections, agents can collect insights, perform transactions, and collaborate across digital infrastructure.

In summary, integration interfaces empower AI Agents to function as connected, action-oriented entities—linking intelligence with execution by bridging internal reasoning mechanisms and external operational systems.
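A trigger-and-steps workflow of the kind described above can be sketched as follows. The step functions here only modify a dictionary; in a real deployment each would wrap an API call to an external system. The event fields, the 500 limit, and every name in the example are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

Step = Callable[[dict], dict]


@dataclass
class Workflow:
    trigger: Callable[[dict], bool]          # predefined rule that starts the workflow
    steps: List[Step] = field(default_factory=list)

    def run(self, event: dict) -> Optional[dict]:
        """Run all steps in order if the trigger matches; otherwise do nothing."""
        if not self.trigger(event):
            return None
        context = dict(event)
        for step in self.steps:
            context = step(context)
        return context


# Hypothetical steps; each stands in for a call to an external system.
def approve_request(ctx: dict) -> dict:
    return {**ctx, "status": "approved"}


def generate_report(ctx: dict) -> dict:
    return {**ctx, "report": f"Request {ctx['id']} {ctx['status']}"}


def update_record(ctx: dict) -> dict:
    return {**ctx, "record_updated": True}


expense_workflow = Workflow(
    trigger=lambda e: e.get("type") == "expense" and e.get("amount", 0) <= 500,
    steps=[approve_request, generate_report, update_record],
)
```

An event that satisfies the trigger flows through all three steps in sequence; one that does not (for example, an amount above the limit) is left for another workflow or a human to handle.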

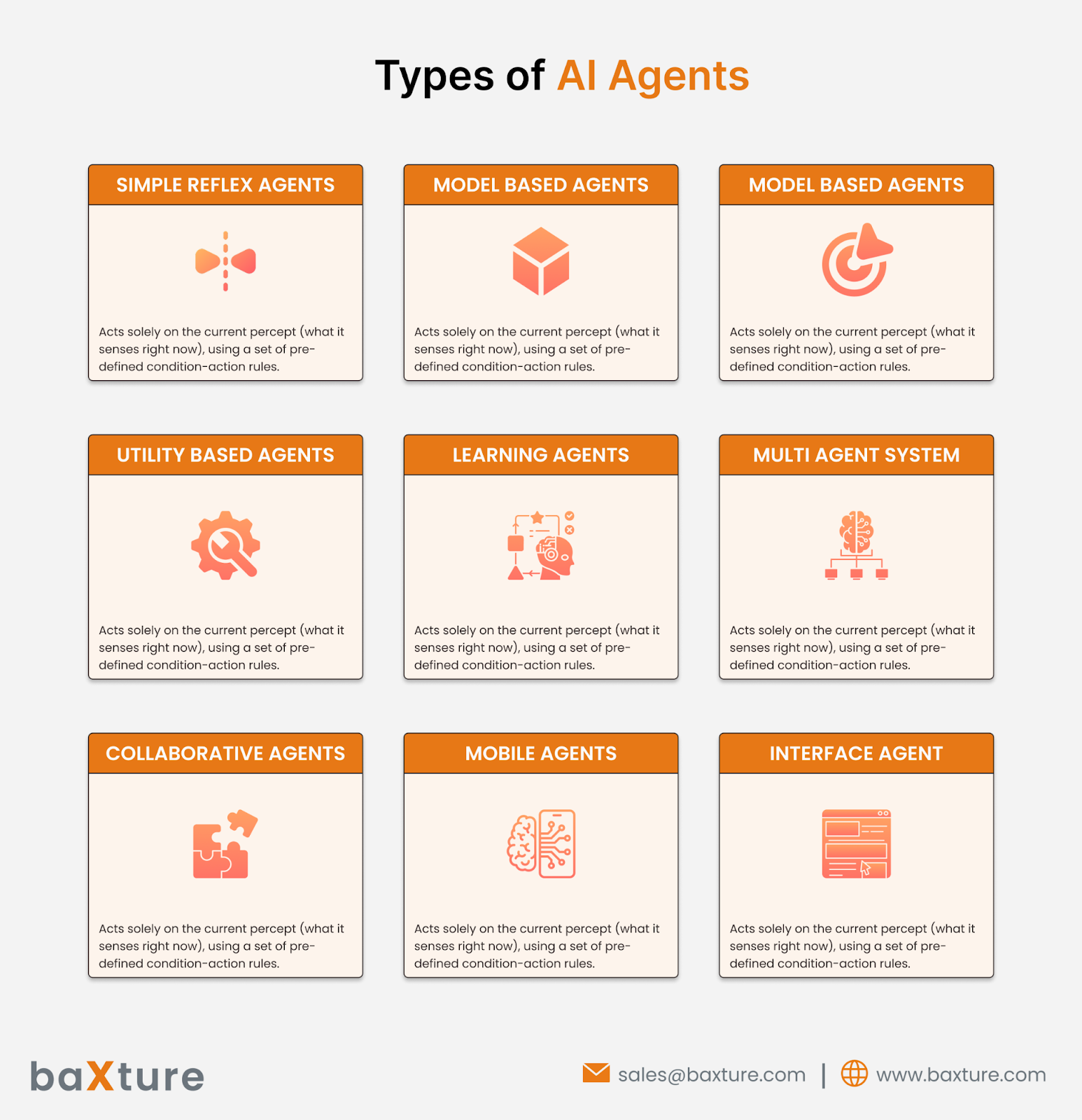

Types of AI Agents

AI Agents can be categorized based on how they perceive, decide, learn, and act. Each type reflects a different level of intelligence, autonomy, and adaptability in achieving goals.

Reactive Agents – Respond to Stimuli without Memory or Planning

Reactive Agents operate purely on immediate input. They respond directly to environmental stimuli without relying on memory, past experiences, or future planning. Their behavior is rule-based—specific inputs trigger predefined outputs.

Example: A thermostat adjusting temperature based on current readings, or a simple game character that moves only when an obstacle appears.

These agents are fast and reliable in predictable settings but lack the ability to adapt or learn from experience.
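The thermostat example reduces to a pure function of the current reading, which is what makes it reactive. The target temperature and tolerance band below are illustrative values, not recommendations.

```python
def thermostat(reading_c: float, target_c: float = 21.0, band: float = 0.5) -> str:
    """Map the current temperature reading directly to an action.

    No memory and no planning: the same input always yields the same output.
    """
    if reading_c < target_c - band:
        return "heat"
    if reading_c > target_c + band:
        return "cool"
    return "idle"
```

Because the function keeps no state, it cannot notice that a room is heating unusually slowly or anticipate a cold night; those capabilities require the memory and models discussed in the following agent types.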

Deliberative Agents – Plan Actions Based on Internal Models

Deliberative Agents possess an internal model of the world that helps them reason, predict outcomes, and plan actions before executing them. They consider multiple options, evaluate consequences, and choose the most effective path toward a goal.

Example: A route-planning AI that analyzes traffic data to find the fastest travel path.

These agents are intelligent and strategic but may be slower due to complex computation and planning processes.
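As a sketch of deliberation, the route planner below searches an internal model of the road network before committing to a path. It uses Dijkstra's algorithm, chosen here as one common option; the graph format (`graph[u][v]` holds the travel time from `u` to `v`) and the sample roads are assumptions for the example.

```python
import heapq
from typing import Dict, List, Optional, Tuple


def plan_route(
    graph: Dict[str, Dict[str, float]], start: str, goal: str
) -> Optional[Tuple[float, List[str]]]:
    """Dijkstra's algorithm: return (total_cost, path), or None if unreachable."""
    frontier = [(0.0, start, [start])]  # (cost so far, node, path taken)
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, weight in graph.get(node, {}).items():
            if nxt not in visited:
                heapq.heappush(frontier, (cost + weight, nxt, path + [nxt]))
    return None


# Hypothetical road network with travel times in minutes.
roads = {
    "A": {"B": 4, "C": 2},
    "B": {"D": 3},
    "C": {"B": 1, "D": 7},
}
```

With this network, `plan_route(roads, "A", "D")` evaluates the alternatives and settles on A, C, B, D at a total cost of 6, beating the more direct A, B, D route at 7. The extra computation is the price of considering options before acting.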

Learning Agents – Improve Through Experience and Feedback

Learning Agents have the capability to adapt and evolve by learning from data, feedback, and prior actions. They continuously refine their performance through methods such as reinforcement learning, supervised learning, or experience replay.

Example: A recommendation system that improves suggestions based on user behavior over time.

Such agents become more efficient and accurate the longer they operate, as they adjust to new patterns and changing environments.
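A very small learning agent can be built from an epsilon-greedy bandit, which is one simple reinforcement-style technique (not necessarily what any particular recommendation system uses). The class name, the reward convention (1.0 for a click, 0.0 otherwise), and the default `epsilon` are assumptions for illustration.

```python
import random
from typing import Iterable, Optional


class EpsilonGreedyRecommender:
    """Recommends items and updates its value estimates from feedback."""

    def __init__(self, items: Iterable[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.items = list(items)
        self.epsilon = epsilon
        self.counts = {item: 0 for item in self.items}
        self.values = {item: 0.0 for item in self.items}  # running mean reward
        self.rng = random.Random(seed)

    def recommend(self) -> str:
        # Explore occasionally; otherwise exploit the best-known item.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.items)
        return max(self.items, key=lambda item: self.values[item])

    def feedback(self, item: str, reward: float) -> None:
        # Incremental mean: new_mean = old_mean + (reward - old_mean) / n
        self.counts[item] += 1
        n = self.counts[item]
        self.values[item] += (reward - self.values[item]) / n
```

Each call to `feedback` nudges the estimate for that item toward the observed reward, so recommendations shift as user behavior accumulates. Setting `epsilon` to 0 disables exploration, which is useful for testing but tends to lock in early impressions.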

Collaborative Agents – Work with Other Agents or Humans

Collaborative Agents are designed for team-oriented environments, where coordination and communication are key. They interact with other agents or humans to share information, distribute tasks, and achieve collective goals.

Example: Multiple delivery drones coordinating to cover different routes efficiently, or a digital assistant working alongside human employees in a support team.

These agents emphasize cooperation, negotiation, and shared problem-solving.

Hybrid Agents – Combine Reactive, Deliberative, and Learning Traits

Hybrid Agents integrate the strengths of reactive, deliberative, and learning models to create a balanced, flexible system. They can respond instantly to real-time changes (reactive), plan ahead strategically (deliberative), and improve continuously through experience (learning).

Example: An autonomous vehicle that reacts instantly to sudden obstacles, plans routes intelligently, and refines driving performance over time.

Hybrid Agents represent the most advanced form of intelligent behavior—adaptive, goal-driven, and context-aware across multiple situations.

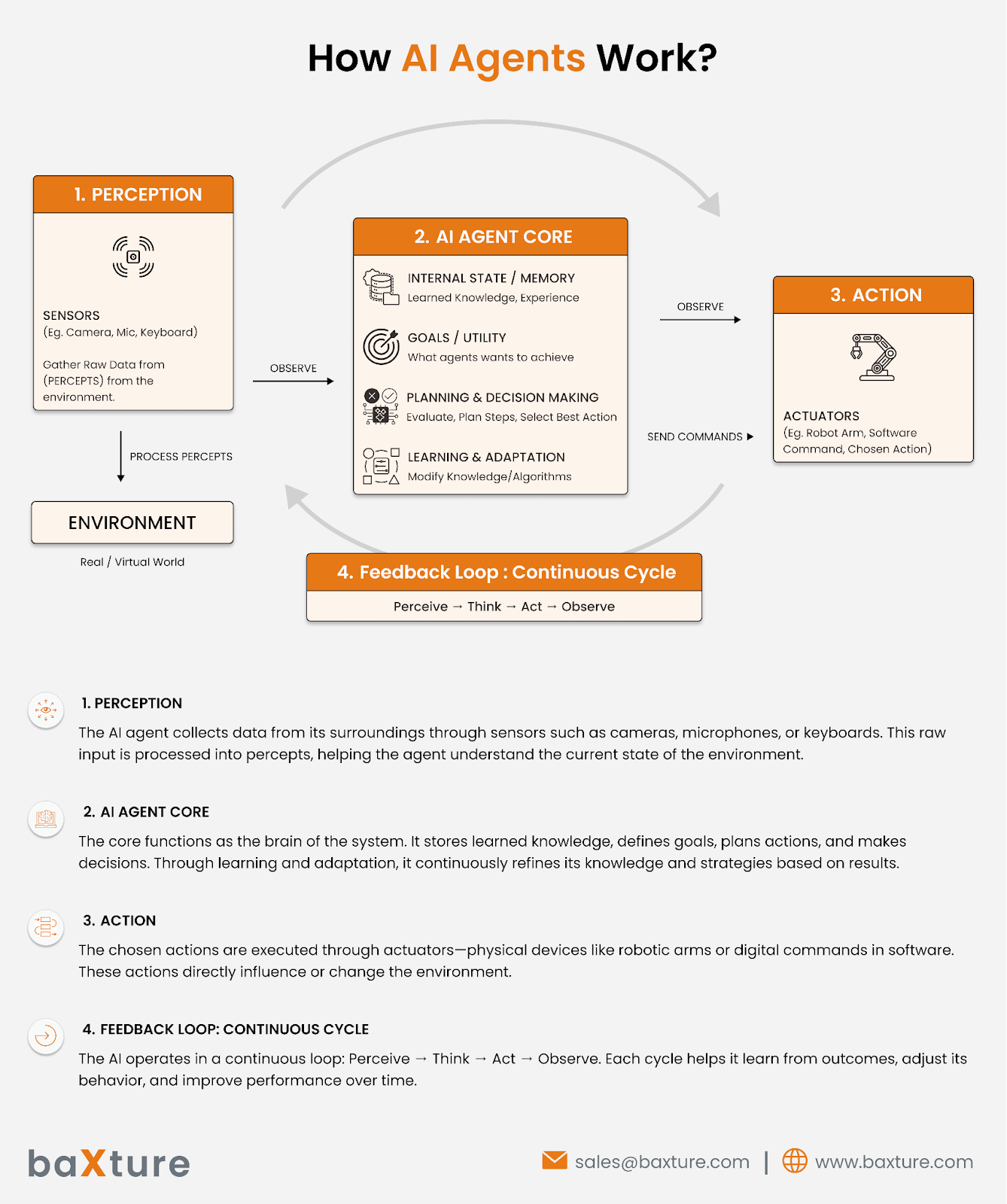

How AI Agents Work (Step-by-step)

AI Agents operate through a repeating cycle that turns raw inputs into purposeful actions and continuous improvement. First, the agent perceives the environment by collecting data from sensors, APIs, or user inputs. Next, the agent interprets the data to build context, extract intent, and form an internal representation of the current state. Based on that representation and the agent’s goals, the agent decides on a goal-directed action using rules, models, planning, or learned policies. The chosen action is then executed autonomously via system calls, device controls, or API interactions. After execution, the agent observes the outcome and compares results to expected goals; this feedback drives learning mechanisms that adjust future behavior (for example, updating model weights or changing a decision rule). The cycle repeats, enabling continuous feedback and optimization so the agent becomes more accurate, efficient, and robust over time.

Step-by-step breakdown

- Perceive environment (data input): Gather signals — sensor readings, text, images, logs, user events, or API data.

- Interpret data (context understanding): Clean, transform, and analyze inputs to detect patterns, intents, and the current state.

- Decide on goal-directed action: Use reasoning, planning, or learned policies to choose the best action that moves toward the goal.

- Execute task autonomously: Perform the action through integrations, device commands, or user-facing outputs.

- Learn from outcome and adjust behavior: Measure results, compute error or reward, and update models or rules accordingly.

- Continuous feedback and optimization cycle: Repeat the loop, refining perception, reasoning, actions, and learning for better future performance.

This loop—perceive → interpret → decide → act → learn—is the practical engine behind intelligent, adaptive AI Agents.
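The cycle can be written as a short driver function that takes each stage as a parameter. The toy scenario that follows it, a heater agent that does not know how effective its heater is and learns this from outcomes, is entirely hypothetical; the constants and the 0.5 learning rate are arbitrary choices for the sketch.

```python
from typing import Callable, List, Tuple


def run_agent_loop(
    perceive: Callable, interpret: Callable, decide: Callable,
    act: Callable, learn: Callable, cycles: int,
) -> List[Tuple]:
    """Repeat perceive -> interpret -> decide -> act -> learn."""
    history = []
    for _ in range(cycles):
        raw = perceive()                 # data input
        state = interpret(raw)           # context understanding
        action = decide(state)           # goal-directed choice
        outcome = act(action)            # autonomous execution
        learn(state, action, outcome)    # adjust future behavior
        history.append((state, action, outcome))
    return history


def demo(cycles: int = 10) -> Tuple[float, float]:
    target = 20.0
    true_gain = 0.5            # degrees per unit of power; unknown to the agent
    room = {"temp": 15.0}      # the environment
    belief = {"gain": 1.0}     # the agent's initial (wrong) estimate

    def perceive() -> dict:
        return {"sensor": "thermometer", "value": room["temp"]}

    def interpret(raw: dict) -> float:
        return target - raw["value"]         # gap to the goal

    def decide(error: float) -> float:
        return error / belief["gain"]        # power needed, per current belief

    def act(power: float) -> float:
        delta = power * true_gain
        room["temp"] += delta
        return delta                         # observed temperature change

    def learn(error: float, power: float, delta: float) -> None:
        if power != 0:
            observed_gain = delta / power
            belief["gain"] += 0.5 * (observed_gain - belief["gain"])

    run_agent_loop(perceive, interpret, decide, act, learn, cycles)
    return room["temp"], belief["gain"]
```

Running `demo()` shows both effects of the loop: the room temperature approaches the target, and the agent's belief about the heater moves from its initial guess toward the true value, so later actions are better calibrated than earlier ones.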

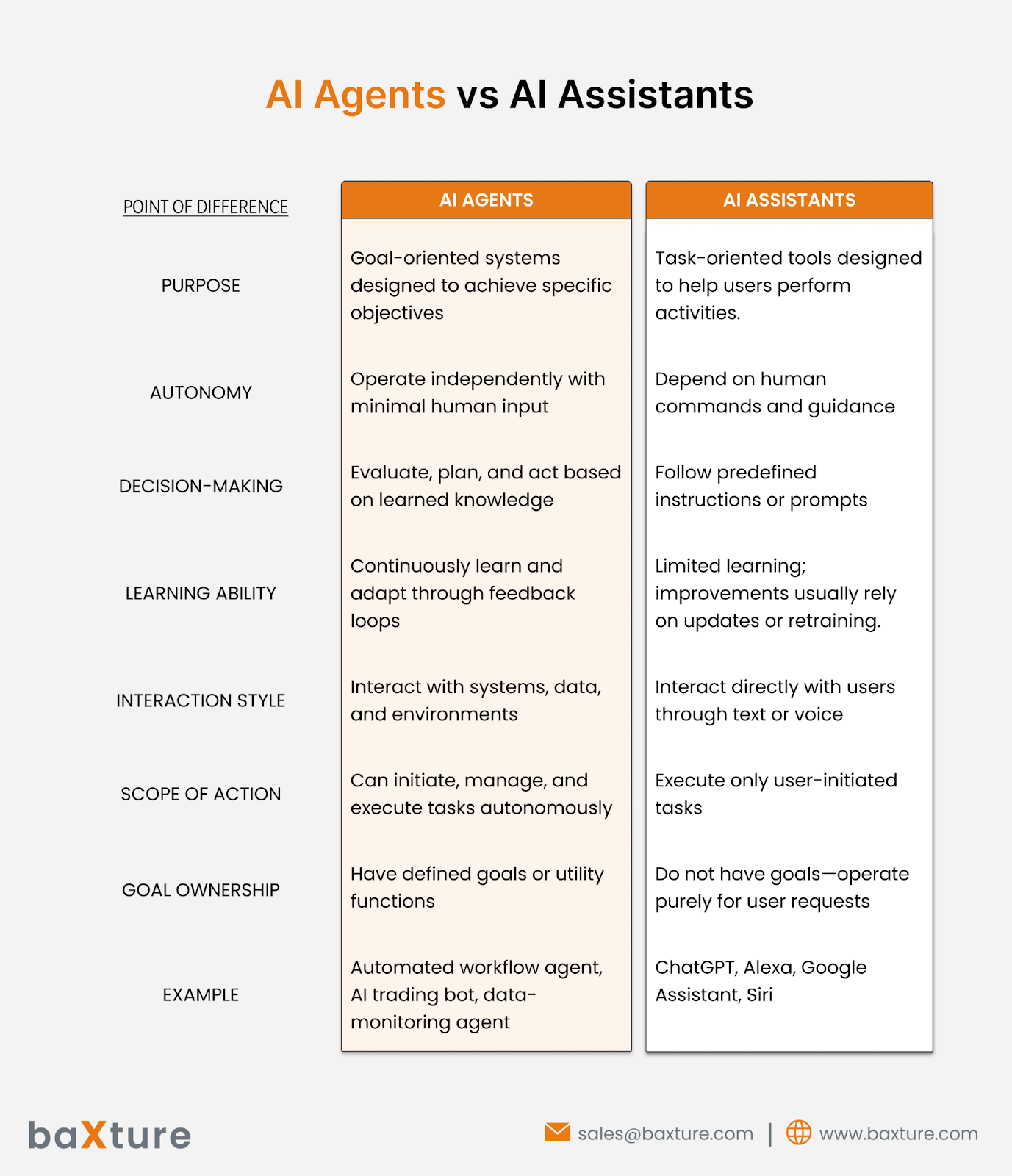

AI Agents vs AI Assistants

Definition and Core Difference

AI Assistants are designed to support users by performing predefined tasks based on direct commands or requests. They rely on user input to function and focus primarily on interaction, task automation, and information retrieval.

AI Agents, on the other hand, are autonomous systems capable of understanding goals, planning actions, and executing tasks independently. They do not just assist but act on behalf of the user, often coordinating with other systems or agents to achieve objectives without continuous supervision.

Comparison Table

Use Case Distinctions

- AI Assistant Example: A virtual assistant that responds to “Schedule a meeting for tomorrow at 10 AM” and creates a calendar entry.

- AI Agent Example: A project management agent that identifies meeting conflicts, reschedules automatically, sends reminders, and updates dependent tasks, without being told.

- AI Assistant in Business: Helps employees retrieve data or draft emails on request.

- AI Agent in Business: Monitors KPIs, detects risks, and autonomously initiates corrective actions to maintain project timelines.

Use Cases of AI Agents

AI Agents are transforming industries by taking on complex, repetitive, and decision-driven tasks that traditionally required human effort. Their ability to act autonomously, learn continuously, and adapt to dynamic environments makes them valuable across multiple business domains.

1. Business Operations: Task Automation, Data Monitoring, Report Generation

AI Agents streamline internal operations by automating repetitive workflows, monitoring business data in real time, and generating analytical reports. They can identify inefficiencies, alert decision-makers to performance deviations, and optimize resource allocation—reducing manual effort and improving operational accuracy.

2. Customer Service: Self-Learning Support Bots, Ticket Resolution Agents

In customer support, AI Agents act as intelligent service representatives that learn from interactions. They handle inquiries, escalate complex cases, and even predict customer needs based on behavior. These agents reduce response times, enhance customer satisfaction, and continuously improve through feedback loops.

3. Finance: Algorithmic Trading, Fraud Detection

AI Agents in finance autonomously execute high-speed trading decisions, analyze market trends, and detect anomalies indicative of fraudulent activity. By processing vast financial data in real time, they enable firms to react instantly to market shifts and prevent potential risks.

4. Healthcare: Diagnostic Decision Support, Patient Monitoring

Healthcare AI Agents assist clinicians by analyzing medical data, supporting diagnostic decisions, and continuously monitoring patient vitals. They detect early signs of deterioration, recommend treatment options, and ensure timely interventions, enhancing both accuracy and patient care outcomes.

5. IT & DevOps: Infrastructure Management, Automated Deployments

AI Agents manage complex IT ecosystems by overseeing infrastructure health, predicting failures, and automating software deployments. They can detect configuration issues, initiate self-healing actions, and ensure systems remain stable and secure without human intervention.

6. Manufacturing: Predictive Maintenance, Robotic Process Automation

In manufacturing, AI Agents analyze machine performance to predict maintenance needs before breakdowns occur. They also coordinate robotic processes, manage production schedules, and adapt workflows to changing conditions—minimizing downtime and maximizing output.

7. Marketing & Sales: Lead Scoring, Behavior Prediction, Campaign Optimization

AI Agents in marketing analyze consumer behavior to score leads, personalize campaigns, and optimize engagement strategies. They monitor performance metrics in real time, adjust ad spending, and identify high-conversion opportunities automatically—driving smarter marketing decisions and improved ROI.

Building and Deploying AI Agents

Defining the Objective

The foundation of an AI agent begins with a clearly defined purpose. Determine the exact goal or problem the agent is meant to solve—whether it’s automating workflows, providing customer insights, or managing system operations. A precise objective guides architecture, training data, and evaluation metrics.

Selecting the Environment

Define the operational context where the agent will function—such as enterprise systems, customer support portals, IoT networks, or cloud infrastructure. This determines the complexity of integration, data access, and communication patterns.

Choosing the Framework or Platform

Select a development framework that aligns with the agent’s functionality and scalability needs. Options like LangChain, AutoGPT, CrewAI, and OpenDevin provide modular tools for building autonomous, multi-step, and API-connected agents.

Integrating with Data Sources and APIs

Connect the agent to structured and unstructured data sources—databases, CRMs, knowledge graphs, or document repositories. API integrations enable real-time access to business systems, ensuring accurate decision-making and contextual understanding.

Training and Testing the Agent

Develop and fine-tune the agent using supervised learning, reinforcement learning, or rule-based models. Test against controlled environments to validate response accuracy, decision consistency, and goal completion efficiency.

Deployment and Monitoring

Deploy the agent within production environments or sandboxed systems using scalable cloud or on-premise infrastructure. Establish monitoring dashboards to track agent activity, task success rates, and system performance in real time.
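A monitoring dashboard ultimately rests on simple metrics such as a rolling task success rate. The sketch below tracks that one metric over a sliding window; the window size and alert threshold are placeholder values, and the class is a hypothetical helper rather than part of any monitoring product.

```python
from collections import deque


class TaskMonitor:
    """Tracks the success rate over the most recent tasks."""

    def __init__(self, window: int = 100, alert_below: float = 0.9):
        self.outcomes = deque(maxlen=window)  # old results fall off automatically
        self.alert_below = alert_below

    def record(self, success: bool) -> None:
        self.outcomes.append(success)

    def success_rate(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence of failure yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        return self.success_rate() < self.alert_below
```

A sliding window is used so that the metric reflects recent behavior: an agent that degrades after a data or API change shows up quickly instead of being masked by a long history of earlier successes.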

Continuous Learning and Improvement

Enable feedback loops for adaptive learning. The agent should analyze outcomes, user interactions, and performance metrics to self-improve over time—enhancing accuracy, adaptability, and decision-making with every iteration.

Agentic AI Platforms and Frameworks

Overview of Popular Agentic AI Platforms

Agentic AI platforms provide the foundational infrastructure for building, orchestrating, and deploying autonomous agents. These platforms enable agents to reason, plan, and act across various environments while maintaining contextual awareness. Popular platforms like LangGraph, CrewAI, AutoGen, and OpenDevin focus on modular architecture, allowing developers to design agents capable of dynamic decision-making, multi-step workflows, and cross-system communication.

Key Components in Agent Orchestration Frameworks

Agentic frameworks typically include several core components:

- Agent Core: Defines the agent’s behavior, objectives, and decision logic.

- Memory Module: Stores and retrieves past interactions or knowledge for contextual continuity.

- Planner: Breaks down complex goals into manageable tasks or sub-goals.

- Executor: Handles task completion through actions, tool use, or API interactions.

- Coordinator/Orchestrator: Manages communication among multiple agents or systems to ensure goal alignment and task synchronization.
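How these components fit together can be sketched without reference to any specific framework. In the example below the planner just splits a goal string on the word "then", where an LLM-based framework would typically use a model call; the tool names and every class are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Memory:
    """Stores (task, result) pairs for later context."""
    records: List[Tuple[str, str]] = field(default_factory=list)

    def remember(self, task: str, result: str) -> None:
        self.records.append((task, result))


class Planner:
    """Breaks a goal into sub-tasks (naively, by splitting on ' then ')."""

    def plan(self, goal: str) -> List[str]:
        return [part.strip() for part in goal.split(" then ") if part.strip()]


class Executor:
    """Completes a task by calling the tool named by its first word."""

    def __init__(self, tools: Dict[str, Callable[[str], str]]):
        self.tools = tools

    def execute(self, task: str) -> str:
        verb, _, argument = task.partition(" ")
        tool = self.tools.get(verb)
        if tool is None:
            return f"no tool for '{verb}'"
        return tool(argument)


class Orchestrator:
    """Coordinates planner, executor, and memory for one goal."""

    def __init__(self, planner: Planner, executor: Executor, memory: Memory):
        self.planner = planner
        self.executor = executor
        self.memory = memory

    def run(self, goal: str) -> List[str]:
        results = []
        for task in self.planner.plan(goal):
            result = self.executor.execute(task)
            self.memory.remember(task, result)
            results.append(result)
        return results
```

Keeping the components separate means each can be swapped independently, for example replacing the naive planner with a model-backed one while leaving the executor and memory unchanged.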

Role of LLMs in Powering Agents

Large Language Models (LLMs) act as the cognitive backbone of agentic systems. They interpret natural language inputs, generate contextually relevant responses, and make logical inferences. LLMs empower agents to understand intent, reason through ambiguity, and autonomously plan actions based on environmental feedback or user prompts. A deployed model's weights do not change as it is used, so improvement over time typically comes from surrounding mechanisms such as memory modules, retrieved context, feedback-driven prompt updates, or periodic fine-tuning.

Example Frameworks

- LangGraph: A framework for creating multi-agent systems with graph-based control flows and contextual state management.

- CrewAI: Focuses on collaborative agent ecosystems where multiple agents coordinate to complete complex tasks.

- AutoGen: Provides tools for dialogue-based agent creation, supporting human-agent collaboration and multi-agent reasoning.

- OpenDevin (since renamed OpenHands): Designed for autonomous software development, enabling agents to plan, code, test, and debug applications.

Governance and Ethical Considerations

Transparency and Explainability

Transparency and explainability are essential to build trust in AI agents. Each decision or action taken by an agent should be traceable and justifiable. Explainable AI mechanisms help users understand how inputs are processed, what reasoning led to specific outcomes, and whether those outcomes align with organizational objectives. Clear documentation and audit trails ensure accountability and foster responsible AI adoption.

Human Oversight

While AI agents can operate autonomously, human oversight remains critical to maintain accountability. Supervision ensures that agents act within ethical and operational boundaries, especially when handling sensitive or high-impact tasks. Human-in-the-loop systems enable intervention when decisions deviate from expected outcomes, balancing autonomy with human judgment and responsibility.

Bias and Fairness

AI agents can inadvertently inherit biases present in their training data. Addressing bias involves careful data selection, continuous monitoring, and fairness auditing. By integrating ethical design principles and diverse datasets, developers can reduce discriminatory patterns and ensure equitable decision-making across demographics, markets, or user groups.

Security and Data Privacy

AI agents often process large volumes of confidential and sensitive information. Ensuring secure data handling, encryption, and controlled access is essential to prevent misuse or breaches. Privacy-preserving techniques, such as differential privacy and federated learning, can further safeguard user data while maintaining the agent’s learning capabilities.
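Differential privacy is often introduced through the Laplace mechanism, which adds calibrated noise to a query result. The sketch below applies it to a counting query, whose sensitivity is 1 because adding or removing one person changes the count by at most 1. The function names and the sample data are assumptions; production systems should rely on a vetted library rather than hand-rolled noise.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    values: Iterable,
    predicate: Callable[[object], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Counting query with the Laplace mechanism (sensitivity = 1)."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

A smaller `epsilon` means more noise and stronger privacy; a larger one means a more accurate answer. Any single answer is noisy, so the mechanism protects individuals while aggregate patterns remain usable.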

Compliance with AI Regulations

Governance frameworks must align with AI laws and standards such as the EU AI Act, GDPR, and the voluntary NIST AI Risk Management Framework. Compliance ensures agents operate within legal boundaries, addressing accountability, transparency, and safety. Organizations must establish internal policies for AI governance to monitor regulatory adherence and ethical deployment.

Challenges and Limitations

Technical Complexity and Scalability

Building and managing AI agents involves high technical complexity. As agents scale across environments and interact with multiple systems, maintaining performance, synchronization, and reliability becomes challenging. Designing architectures that can handle concurrent operations, real-time decisions, and continuous learning requires significant computational and engineering resources.

Data Dependency and Quality Issues

AI agents rely heavily on data for perception, reasoning, and learning. Inaccurate, incomplete, or biased data can degrade performance and lead to unreliable decisions. Maintaining data integrity, ensuring real-time updates, and integrating diverse data sources are ongoing challenges that directly affect agent accuracy and behavior.

Interpretability and Debugging Challenges

As agents make autonomous decisions based on deep learning or reinforcement models, understanding why a specific action was taken can be difficult. Limited interpretability complicates debugging and accountability. Implementing explainable AI (XAI) frameworks and transparent reasoning logs is essential to identify errors and improve reliability.
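A transparent reasoning log can be as simple as recording, for each decision, what the agent saw, which options it considered, what it chose, and why. The field names and the JSON Lines export below are assumptions for illustration, not a standard format.

```python
import json
from datetime import datetime, timezone
from typing import List


class DecisionLog:
    """Append-only record of agent decisions for debugging and audits."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def record(self, inputs: dict, options: list, chosen: str, reason: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "inputs": inputs,
            "options": options,
            "chosen": chosen,
            "reason": reason,
        }
        self.entries.append(entry)
        return entry

    def explain(self, index: int) -> str:
        """Human-readable summary of one decision."""
        e = self.entries[index]
        return f"Chose '{e['chosen']}' from {e['options']} because {e['reason']}"

    def to_jsonl(self) -> str:
        """Export one JSON object per line for external audit tools."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.entries)
```

A log like this does not make an opaque model interpretable on its own, but it gives engineers a trail to follow when an action needs to be reproduced or questioned.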

Balancing Autonomy and Control

The degree of autonomy granted to an agent must align with the organization’s risk tolerance. Excessive autonomy may result in unintended actions, while excessive human control can limit efficiency. Achieving the right balance between independence and oversight is one of the most persistent design and governance challenges in agentic AI systems.

Integration Barriers with Legacy Systems

Integrating AI agents into existing technology infrastructures often faces compatibility and security constraints. Legacy systems may lack APIs, structured data, or modern architecture support, making seamless integration difficult. Overcoming these barriers requires middleware solutions, custom connectors, and gradual modernization strategies.
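A custom connector often amounts to an adapter that hides a legacy format behind a clean interface. The sketch below assumes a hypothetical legacy system that exports fixed-width text records (8 characters of SKU followed by a 6-digit quantity); the layout, class name, and `fetch_raw` callback are invented for the example.

```python
from typing import Callable, Dict


class LegacyInventoryAdapter:
    """Presents fixed-width legacy records as a structured dictionary."""

    SKU_WIDTH = 8
    QTY_WIDTH = 6

    def __init__(self, fetch_raw: Callable[[], str]):
        # fetch_raw stands in for however the legacy export is obtained
        # (file drop, terminal scrape, batch job output, and so on).
        self.fetch_raw = fetch_raw

    def get_stock(self) -> Dict[str, int]:
        stock = {}
        for line in self.fetch_raw().splitlines():
            if not line.strip():
                continue  # skip blank lines in the export
            sku = line[: self.SKU_WIDTH].strip()
            qty_end = self.SKU_WIDTH + self.QTY_WIDTH
            stock[sku] = int(line[self.SKU_WIDTH : qty_end])
        return stock
```

The agent calls `get_stock()` and never sees the legacy format, so when the old system is eventually modernized only the adapter needs to change.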

The Future of AI Agents

Shift from Reactive Automation to Proactive Intelligence

AI agents are evolving from performing predefined, reactive tasks to exhibiting proactive intelligence. Future systems will anticipate user needs, predict outcomes, and initiate actions autonomously. This shift will redefine automation—turning static task execution into dynamic, goal-driven problem solving that adapts in real time.

Rise of Multi-Agent Collaboration Ecosystems

The next generation of agentic systems will emphasize collaboration among multiple agents working toward shared objectives. These ecosystems will coordinate across domains such as business operations, supply chains, and software development, enabling distributed intelligence where agents communicate, negotiate, and collectively optimize performance.

Integration with Digital Twins, IoT, and Real-Time Environments

AI agents will increasingly operate within interconnected digital ecosystems that mirror physical environments. By integrating with digital twins, IoT devices, and real-time data streams, agents will manage predictive maintenance, logistics, and environmental monitoring with precision—bridging the gap between digital and physical operations.

Evolving Role of Humans as Orchestrators, Not Operators

As autonomy and intelligence deepen, human roles will transition from manual supervision to strategic orchestration. Humans will define objectives, validate outcomes, and provide ethical guidance, while agents manage execution and adaptation. This synergy will strengthen decision-making, creativity, and innovation across industries.

Predictions for Next-Generation Agentic AI

Future AI agents will combine reasoning, memory, and communication to function as self-improving digital entities. They will move beyond individual automation toward collective intelligence, where systems learn collaboratively and evolve continuously. With advancements in large language models, contextual reasoning, and decentralized architectures, AI agents will become integral to how organizations operate, innovate, and scale.

Conclusion

AI agents represent the next stage in intelligent system evolution—moving from static automation toward adaptive, goal-driven autonomy. Throughout this exploration, the core principles of perception, reasoning, action, and learning have defined how agents operate and interact within complex environments. From reactive to collaborative and hybrid models, AI agents are reshaping how systems think, decide, and execute tasks independently.

Their growing influence extends across industries—from customer service and healthcare to finance, IT operations, and marketing—driving efficiency and real-time decision-making. As multi-agent ecosystems, integration with digital twins, and large language model capabilities mature, AI agents will become the foundation for intelligent, self-managing enterprises.

However, with autonomy comes responsibility. Organizations must approach agentic architectures with transparency, ethical oversight, and regulatory compliance to ensure trust and fairness. The future of AI agents lies not only in their capability to act independently but in how responsibly they are designed, deployed, and governed to serve human objectives effectively.